This tutorial explains how to define a trainable workflow.

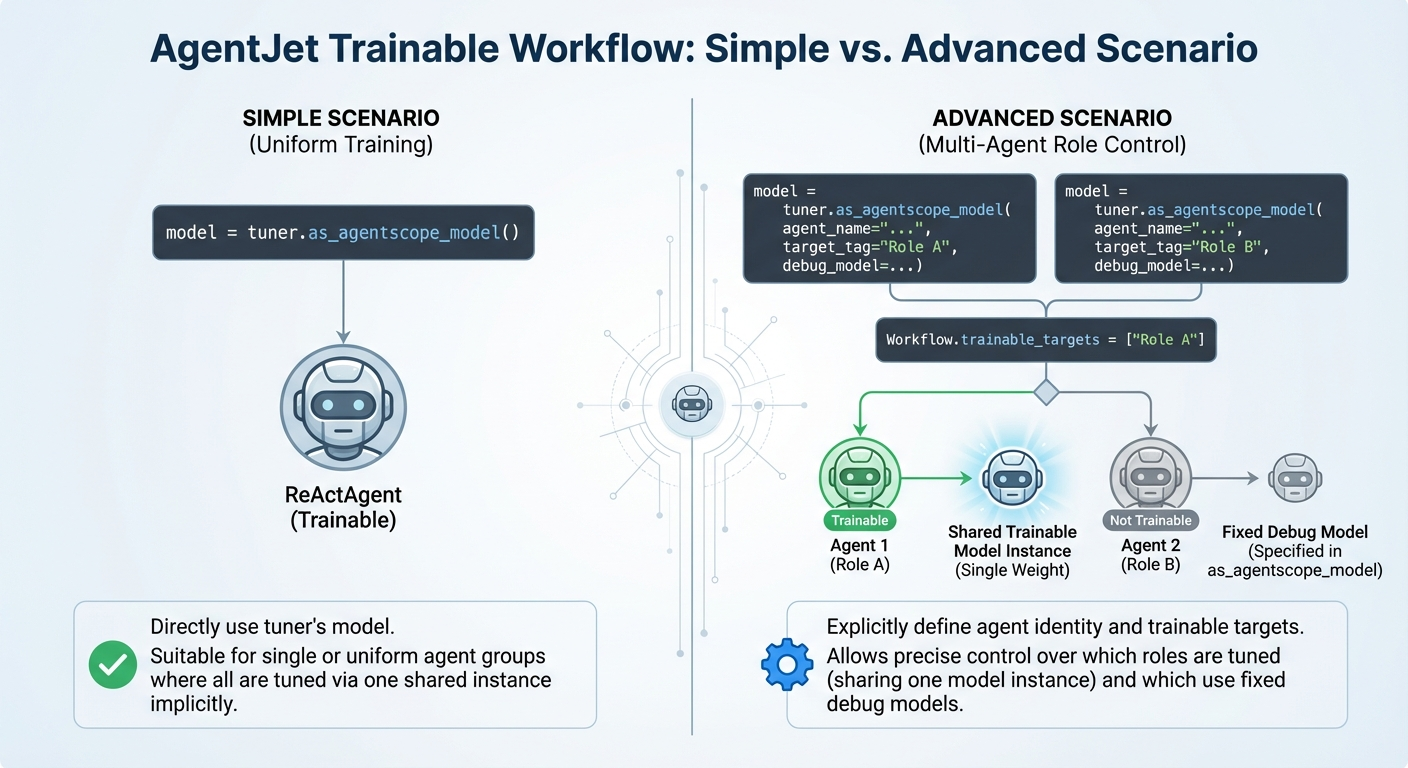

AgentJet provides two convenient and mutually compatible ways to wrap your Workflow:

- Simple: Emphasizes simplicity, ease of use, and readability

- Advanced: Emphasizes flexibility, controllability, and extensibility

This article uses the AgentScope framework for demonstration. For other frameworks (OpenAI SDK, LangChain, raw HTTP requests), follow the same pattern.

Simple Practice

Simple Practice Abstract

- Simply set the `model` argument of the AgentScope `ReActAgent` to `tuner.as_agentscope_model()` when initializing your agent.

- Wrap your code with `class MyWorkflow(Workflow)` and your agent is ready to be tuned.

1. When to Use This Simple Practice

Choose Simple Practice If You...

- Know exactly which agents should be trained, or the number of agents is small

- Already finished basic debugging of your workflow

- Do not need to change which agents are trained on the fly

2. Convert Your Workflow to AgentJet Trainable Workflow

The first step is to create a class that serves as a container for your code:

Next, take the `tuner` argument and call its `tuner.as_agentscope_model()` method:
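The two steps can be sketched together as follows. This is only an illustration of the pattern, not AgentJet's actual code: `Workflow`, `WorkflowOutput`, `StubTuner`, and `StubAgent` are hypothetical stand-ins defined here so the snippet runs without AgentJet or AgentScope installed, and the task is passed as a plain string for brevity. Only the names `execute`, `as_agentscope_model()`, `reward`, and `is_success` are taken from this tutorial.

```python
import asyncio
from dataclasses import dataclass


# --- Hypothetical stand-ins so this sketch runs without AgentJet or AgentScope ---
@dataclass
class WorkflowOutput:
    reward: float
    is_success: bool


class Workflow:
    """Stand-in for AgentJet's Workflow base class."""

    async def execute(self, workflow_task, tuner) -> WorkflowOutput:
        raise NotImplementedError


class StubTuner:
    """Stand-in for AjetTuner; only the method name comes from the tutorial."""

    def as_agentscope_model(self):
        return "trainable-model"  # placeholder for the real model wrapper


class StubAgent:
    """Stand-in for AgentScope's ReActAgent, reduced to `name` and `model`."""

    def __init__(self, name: str, model):
        self.name = name
        self.model = model

    async def reply(self, question: str) -> str:
        return f"[{self.model}] answer to: {question}"


# --- Step 1: wrap your code in a Workflow subclass ---
class MyWorkflow(Workflow):
    async def execute(self, workflow_task, tuner) -> WorkflowOutput:
        # --- Step 2: hand the tuner's model to the agent via `model=` ---
        agent = StubAgent(name="math_agent", model=tuner.as_agentscope_model())
        answer = await agent.reply(workflow_task)
        # Placeholder reward: a real workflow would score the answer here.
        return WorkflowOutput(reward=1.0 if answer else 0.0, is_success=bool(answer))


result = asyncio.run(MyWorkflow().execute("What is 2 + 2?", StubTuner()))
print(result)
```

In a real project the stand-ins would be replaced by the corresponding AgentJet and AgentScope classes, and the reward would come from checking the agent's answer against the task.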

AjetTuner

AjetTuner also provides the `.as_raw_openai_sdk_client()` and `.as_oai_baseurl_apikey()` methods, but `.as_agentscope_model()` is more convenient for AgentScope agent workflows.

3. Code Example

Math Agent

Training a math agent that can write Python code to solve mathematical problems.

Learning to Ask

Learning to ask questions like a doctor for medical consultation scenarios.

Countdown Game

Writing a countdown game using AgentScope and solving it with RL.

Frozen Lake

Solving a frozen lake walking puzzle using AgentJet's reinforcement learning.

Advanced Practice

Advanced Practice Abstract

- The `tuner.as_agentscope_model()` function has hidden parameters; fill them in to tell AgentJet the identity of each agent.

- The `ajet.Workflow` class has a hidden attribute `trainable_targets`; assign it manually to narrow down the agents to be tuned.

1. When to Use Advanced Practice

When you design a multi-agent collaborative workflow in which each agent plays a different role (identified by its `target_tag`), AgentJet provides enhanced training and debugging capabilities.

Multi-Agent Benefits

With a multi-agent setup, you can:

- Precisely control which agents are fine-tuned

- Explicitly define the default model for agents not being trained

- Switch trainable targets on the fly without modifying source code

1. How to Move to the Advanced Multi-Agent Scenario

It is simple: only two additional things need to be taken care of.

i. `.as_agentscope_model` has three hidden (optional) parameters; fill them in for each agent.

| parameter | explanation |
|---|---|
| `agent_name` | The name of this agent |
| `target_tag` | A tag that marks the agent category |
| `debug_model` | The model used when this agent is not being tuned |

    model_for_an_agent = tuner.as_agentscope_model(
        agent_name="AgentFriday",  # the name of this agent
        target_tag="Agent_Type_1",  # `target_tag in self.trainable_targets` means we train this agent, otherwise we do not train this agent.
        debug_model=OpenAIChatModel(
            model_name="Qwen/Qwen3-235B-A22B-Instruct-2507",
            stream=False,
            api_key="api_key",
        ),  # the model used when this agent is not in `self.trainable_targets`
    )

ii. `Workflow` has a hidden (optional) attribute called `trainable_targets`; configure it.

| `trainable_targets` value | explanation |
|---|---|
| `trainable_targets = None` | All agents using `as_agentscope_model` will be trained |
| `trainable_targets = ["Agent_Type_1", "Agent_Type_2"]` | Agents with `target_tag=Agent_Type_1`, `target_tag=Agent_Type_2`, ... will be trained |
| `trainable_targets = []` | Illegal, no agents are trained |

| Scenario | Model Used |
|---|---|
| `target_tag` in `trainable_targets` | Trainable model |
| `target_tag` NOT in `trainable_targets` | Registered `debug_model` |
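The rules in the two tables above can be read as a small decision function. The sketch below is an assumption about how the documented behavior fits together, not AgentJet's internal implementation; `is_trainable` and `pick_model` are hypothetical helper names, and the models are represented by plain strings.

```python
from typing import List, Optional


def is_trainable(target_tag: str, trainable_targets: Optional[List[str]]) -> bool:
    """Decide whether an agent with this tag is trained, per the tables above."""
    if trainable_targets is None:
        # None means every agent created via as_agentscope_model is trained.
        return True
    if len(trainable_targets) == 0:
        # An empty list is documented as illegal: nothing would be trained.
        raise ValueError("trainable_targets = [] is illegal: no agents would be trained")
    return target_tag in trainable_targets


def pick_model(target_tag, trainable_targets, trainable_model, debug_model):
    """Return the trainable model for trained tags, else the registered debug model."""
    if is_trainable(target_tag, trainable_targets):
        return trainable_model
    return debug_model


# Only werewolves are trained; every other role falls back to its debug model.
print(pick_model("werewolf", ["werewolf"], "trainable", "debug"))
print(pick_model("villager", ["werewolf"], "trainable", "debug"))
```

Raising an error for the empty list is one possible way to surface the "illegal" case early; the real library may handle it differently.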

Warning

Regardless of `target_tag` differences, all agents share a single model instance: one set of model weights plays the different roles, and the model receives different perceptions depending on the role it is playing.

2. Multi-Agent Example

Here's a complete example with multiple agent roles (Werewolves game):

    class ExampleWerewolves(Workflow):
        trainable_targets: List[str] | None = Field(default=["werewolf"], description="List of agents to be fine-tuned.")

        async def execute(self, workflow_task: WorkflowTask, tuner: AjetTuner) -> WorkflowOutput:
            # ensure trainable targets is legal
            assert self.trainable_targets is not None, "trainable_targets cannot be None in ExampleWerewolves (because we want to demonstrate an explicit multi-agent case)."

            # bad guys and good guys cannot be trained simultaneously
            # (because mix-cooperation-competition MARL needs too many advanced techniques to be displayed here)
            if "werewolf" in self.trainable_targets:
                assert len(self.trainable_targets) == 1, "Cannot train hostile roles simultaneously."
            else:
                assert len(self.trainable_targets) != 0, "No trainable targets specified."

            # make and shuffle roles (fix random seed for reproducibility)
            roles = ["werewolf"] * 3 + ["villager"] * 3 + ["seer", "witch", "hunter"]
            task_id = workflow_task.task.metadata["random_number"]
            np.random.seed(int(task_id))
            np.random.shuffle(roles)

            # initialize agents
            players = []
            for i, role in enumerate(roles):
                default_model = OpenAIChatModel(
                    model_name="Qwen/Qwen3-235B-A22B-Instruct-2507",
                    stream=False,
                    api_key="no_api_key",
                )
                model_for_this_agent = tuner.as_agentscope_model(
                    agent_name=f"Player{i + 1}",  # the name of this agent
                    target_tag=role,  # `target_tag in self.trainable_targets` means we train this agent, otherwise we do not train this agent.
                    debug_model=default_model,  # the model used when this agent is not in `self.trainable_targets`
                )
                agent = ReActAgent(
                    name=f"Player{i + 1}",
                    sys_prompt=get_official_agent_prompt(f"Player{i + 1}"),
                    model=model_for_this_agent,
                    formatter=DashScopeMultiAgentFormatter()
                    if role in self.trainable_targets
                    else OpenAIMultiAgentFormatter(),
                    max_iters=3 if role in self.trainable_targets else 5,
                )
                # agent.set_console_output_enabled(False)
                players += [agent]

            # reward condition
            try:
                good_guy_win = await werewolves_game(players, roles)
                raw_reward = 0
                is_success = False
                if (good_guy_win and self.trainable_targets[0] != "werewolf") or (
                    not good_guy_win and self.trainable_targets[0] == "werewolf"
                ):
                    raw_reward = 1
                    is_success = True
                logger.warning(f"Raw reward: {raw_reward}")
                logger.warning(f"Is success: {is_success}")
            except BadGuyException:
                logger.bind(exception=True).exception(
                    "Error during game execution. Game cannot continue, whatever the cause, let's punish trainable agents (although they may be innocent)."
                )
                raw_reward = -0.1
                is_success = False
            except Exception:
                logger.bind(exception=True).exception(
                    "Error during game execution. Game cannot continue, whatever the cause, let's punish trainable agents (although they may be innocent)."
                )
                raw_reward = -0.1
                is_success = False

            return WorkflowOutput(reward=raw_reward, is_success=is_success)
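The reward condition in the example can be isolated as a pure function, which makes it easy to check by hand. This is a minimal sketch that mirrors the `if` condition above; `werewolves_reward` is a hypothetical helper name, and the error branch (reward `-0.1`) is left out.

```python
from typing import List, Tuple


def werewolves_reward(good_guy_win: bool, trainable_targets: List[str]) -> Tuple[int, bool]:
    """Return (raw_reward, is_success) for a finished game.

    The trained side is the werewolves when the first trainable target is
    "werewolf", otherwise it is the good guys.
    """
    training_werewolves = trainable_targets[0] == "werewolf"
    # The trained side wins when the game outcome matches the side being trained.
    trained_side_won = (not good_guy_win) if training_werewolves else good_guy_win
    return (1, True) if trained_side_won else (0, False)


# Training werewolves: they are rewarded only when the good guys lose.
print(werewolves_reward(good_guy_win=False, trainable_targets=["werewolf"]))
# Training villagers: they are rewarded only when the good guys win.
print(werewolves_reward(good_guy_win=True, trainable_targets=["villager"]))
```

Keeping the rule in a standalone function like this is one way to unit-test the reward without running a full game.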

Configuration Flexibility

In this example:

- `role` describes an agent's in-game identity (werewolf, villager, etc.)

- `default_model` (passed as `debug_model`) defines the default model when the role is not being trained

- You can flexibly switch training targets by modifying `trainable_targets`

Swarm

Wrapping and training your agent on a machine without a GPU.

Work in progress; coming soon.